Data lakes are powerful but prone to becoming “Data Swamps”

Data lakes start as a brilliant solution to a modern problem: managing the mountains of data businesses generate every second. They’re flexible and scalable, making it easy to store all kinds of data, structured or not. Unlike traditional data warehouses, which require a schema before data is loaded (schema-on-write), data lakes offer schema-on-read: you store data in its raw form and define its structure only when you query it. This is a huge advantage, especially in fast-moving industries where use cases change constantly.
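To make schema-on-read concrete, here is a minimal Python sketch. It assumes raw events land in the lake as JSON lines with no schema enforced at write time. The sample records and the `read_with_schema` helper are hypothetical, and real lakes usually do this through a query engine rather than hand-written code. The point is that two consumers can read the same raw data through different schemas.

```python
import json

# Hypothetical raw events stored as-is: fields vary from record to record,
# and nothing was validated when they were written.
RAW_EVENTS = [
    '{"ts": "2024-05-01T10:00:00Z", "src_ip": "10.0.0.5", "action": "login"}',
    '{"ts": "2024-05-01T10:05:00Z", "user": "alice", "action": "logout", "extra": 1}',
    '{"ts": "2024-05-01T10:07:00Z"}',
]


def read_with_schema(lines, schema):
    """Apply a schema only at read time: keep the requested fields,
    cast each with the given type, and fill missing fields with None.
    Fields not named in the schema are ignored."""
    rows = []
    for line in lines:
        record = json.loads(line)
        row = {}
        for field, cast in schema.items():
            value = record.get(field)
            row[field] = cast(value) if value is not None else None
        rows.append(row)
    return rows


# Two consumers interpret the same raw data with different schemas.
auth_view = read_with_schema(RAW_EVENTS, {"ts": str, "user": str, "action": str})
network_view = read_with_schema(RAW_EVENTS, {"ts": str, "src_ip": str})
```

Note the trade-off this illustrates: nothing stops a malformed or incomplete record from being stored, so data quality problems surface only when someone reads the data.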

Without rules or structure, though, a data lake turns into a dumping ground. Teams toss in unstructured, low-quality data without thinking about how it fits the bigger picture. Soon, the data becomes unreliable: no one knows what’s useful, where it came from, or how to use it. This disorganization sends storage and compute costs skyrocketing. Compliance issues pop up, and you end up with a mess no one can untangle.

In the 2010s, companies flocked to data lakes, excited by their potential. What many got instead was a textbook example of the “Tragedy of the Commons”: shared resources like these data lakes were overused and under-managed until they lost much of their value.

“A data lake is only as good as the structure and strategy behind it. Without clear oversight, these lakes will sink into swamps, drowning businesses in costs and confusion.”

Security data lakes face unique challenges

Security data lakes are a beast of their own. Enterprises often run between 40 and 100 security tools, each generating massive amounts of data in formats other tools can’t easily read. Instead of playing together like an orchestra, these tools behave like solo artists, creating data silos instead of harmony. A centralized data lake sounds like the perfect solution: pull it all together, make it searchable, and gain insights. Sadly, it’s not that simple.

What happens instead? Chaos. Security data varies widely in quality and purpose: there’s data for production needs, experiments, audits, you name it. Without structure, finding the right piece of information becomes a nightmare. Picture hunting for one critical alert buried under hundreds of files labeled “Critical SOC Alerts.” Worse, the data you finally dig up might be outdated or unreliable. Who’s responsible for fixing it? No one knows.

Then there are the costs. The more data you pour into a mismanaged lake, the higher your compute and storage bills. Compliance is another major drain: if you don’t know what data you have, you can’t protect it. That’s a serious problem at a time when regulations are strict and breaches are expensive.

Proactive strategies keep data lakes clean and functional

A clean, efficient data lake doesn’t happen by accident. You need a game plan. First, don’t treat your lake like a junk drawer. Define what goes in and why. Focus on outcomes—what decisions or insights do you want to drive? Randomly dumping data in, hoping it’ll be useful later, is a recipe for disaster.
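One way to enforce “define what goes in and why” is an ingestion gate that admits data only from sources with a declared purpose. The sketch below is a minimal Python illustration under that assumption; the `SourceRegistration` fields, the `REGISTRY` contents, and the `admit` function are all hypothetical, and in practice the registry would more likely live in a data catalog than in code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SourceRegistration:
    """Hypothetical record declaring why a data source belongs in the lake."""
    source: str
    purpose: str          # the decision or insight this data supports
    retention_days: int   # how long the data is worth keeping


# Hypothetical registry of approved sources.
REGISTRY = {
    "edr_alerts": SourceRegistration("edr_alerts", "triage endpoint detections", 365),
    "vpn_logs": SourceRegistration("vpn_logs", "investigate remote access anomalies", 90),
}


def admit(source: str) -> tuple[bool, str]:
    """Admit data only from registered sources that state a non-empty purpose."""
    reg = REGISTRY.get(source)
    if reg is None:
        return False, f"rejected: '{source}' has no registered purpose"
    if not reg.purpose.strip():
        return False, f"rejected: '{source}' has an empty purpose"
    return True, f"admitted: '{source}' ({reg.purpose}, keep {reg.retention_days} days)"


print(admit("vpn_logs"))
print(admit("random_dump"))
```

Rejecting unregistered sources by default keeps “we might need it someday” data out of the lake until someone states what it is for and how long it should be kept.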

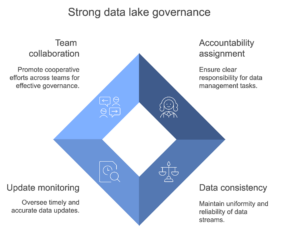

Next, put someone in charge. Better yet, put several people in charge. Strong governance goes beyond deciding who can access the lake: you need clear accountability for every dataset. Who makes sure a log stream stays consistent? Who monitors updates? Governance is a cross-team effort, not a solo act.
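Accountability is easier to maintain when it is checked automatically. The minimal Python sketch below assumes each dataset in an inventory should name both an owning team and an individual steward who watches consistency and updates. The inventory, the role names, and `find_accountability_gaps` are hypothetical and not taken from any particular catalog tool.

```python
# Hypothetical dataset inventory; field names are illustrative only.
DATASETS = [
    {"name": "firewall_logs", "owner": "network-security", "steward": "j.doe"},
    {"name": "phishing_reports", "owner": "soc", "steward": None},
    {"name": "legacy_ids_dump", "owner": None, "steward": None},
]

# Assumed roles: the owner is the accountable team, the steward is the person
# who checks consistency and monitors updates.
REQUIRED_ROLES = ("owner", "steward")


def find_accountability_gaps(datasets):
    """Return {dataset_name: [missing roles]} for datasets lacking a named owner or steward."""
    gaps = {}
    for ds in datasets:
        missing = [role for role in REQUIRED_ROLES if not ds.get(role)]
        if missing:
            gaps[ds["name"]] = missing
    return gaps


print(find_accountability_gaps(DATASETS))
```

Running a check like this on a schedule turns “who’s responsible for fixing it?” from an open question into a short list of datasets that still need an answer.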

Strong data lake governance

Next, metadata, which matters more than many teams think. Use consistent naming conventions and file structures so everyone knows what’s what. Go a step further with data lineage, which tracks where data came from, who owns it, and how it’s used. This makes it easier to answer questions, validate datasets, and keep the lake from becoming a swamp.
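Both ideas can be sketched in a few lines of Python. The path convention below (`<zone>/<source>/<dataset>/dt=YYYY-MM-DD/<file>`) and the zone names are assumptions chosen for illustration, as are the `LineageRecord` fields and the sample lineage graph. The sketch validates paths against the convention and walks lineage records back to the original sources, assuming the lineage graph has no cycles.

```python
import re
from dataclasses import dataclass, field

# Hypothetical convention: <zone>/<source>/<dataset>/dt=YYYY-MM-DD/<file>
PATH_PATTERN = re.compile(
    r"(raw|curated|analytics)/[a-z0-9_]+/[a-z0-9_]+/dt=\d{4}-\d{2}-\d{2}/[^/]+"
)


def follows_convention(path: str) -> bool:
    """Check that an entire storage path matches the naming convention."""
    return PATH_PATTERN.fullmatch(path) is not None


@dataclass
class LineageRecord:
    """Minimal lineage entry: where a dataset came from, who owns it, how it was built."""
    dataset: str
    owner: str
    inputs: list[str] = field(default_factory=list)
    transform: str = ""


def upstream_sources(dataset, lineage):
    """Recursively follow inputs back to datasets with no recorded inputs.
    Assumes the lineage graph is acyclic."""
    record = lineage.get(dataset)
    if record is None or not record.inputs:
        return {dataset}
    sources = set()
    for parent in record.inputs:
        sources |= upstream_sources(parent, lineage)
    return sources


# Hypothetical lineage graph for a security reporting pipeline.
LINEAGE = {
    "raw.edr_alerts": LineageRecord("raw.edr_alerts", "endpoint-team"),
    "raw.vpn_logs": LineageRecord("raw.vpn_logs", "network-team"),
    "curated.suspicious_logins": LineageRecord(
        "curated.suspicious_logins", "soc",
        ["raw.edr_alerts", "raw.vpn_logs"], "join on host and time window"),
    "analytics.weekly_risk": LineageRecord(
        "analytics.weekly_risk", "soc",
        ["curated.suspicious_logins"], "weekly aggregation"),
}
```

For example, `upstream_sources("analytics.weekly_risk", LINEAGE)` traces a report back to the two raw feeds it depends on, which is exactly the question you need answered when one of those feeds turns out to be unreliable.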

Mismanaged data lakes become liabilities

A poorly managed data lake is both a missed opportunity and a liability. You end up with runaway costs, data you can’t use, and systems that slow everything down. Worse, you’re opening yourself up to regulatory fines and security risks. If you don’t know what data you have, how can you protect it from breaches or tampering?

A messy lake compromises your visibility. You can’t detect threats effectively, meet compliance standards, or make confident decisions. Data lakes should be an asset, but when mismanaged, they drain resources and create more problems than they solve.

The solution is straightforward but requires discipline: set clear strategies, enforce governance, and pay attention to metadata. These best practices should form the foundation of any data lake that actually works.

Final thoughts

Are you treating your data as a strategic asset or as just another cost to manage? The future belongs to organizations that see clarity where others see chaos. Is your data lake fueling breakthroughs or bogging you down? Take a careful look at your data, find the weak spots, and take action.